This page was generated from notebooks/L12/2_reinforcement_learning.ipynb.

You can directly download the pdf-version of this page using the link below.

download

Machine Learning and Neural Networks#

We are close to the end of the course and covered different applications of Python to physical problems. The course is not intended to teach the physics, but exercise the application of Python. One field, which is increasingly important also in physics is the field of machine learning. Machine learning is the summarizing term for a number of computational procedures to extract useful information from data. We would like to spend the rest of the course to introduce you into a tiny part of machine learning. We will do that in a way that you calculate as much as possible in pure Python without any additional packages.

[20]:

import numpy as np

import matplotlib.pyplot as plt

from scipy.constants import *

from scipy.sparse import diags

from scipy.fftpack import fft,ifft

from scipy import sparse as sparse

from scipy.sparse import linalg as ln

from time import sleep,time

import matplotlib.patches as patches

from ipycanvas import MultiCanvas, hold_canvas,Canvas

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

# default values for plotting

plt.rcParams.update({'font.size': 16,

'axes.titlesize': 18,

'axes.labelsize': 16,

'axes.labelpad': 14,

'lines.linewidth': 1,

'lines.markersize': 10,

'xtick.labelsize' : 16,

'ytick.labelsize' : 16,

'xtick.top' : True,

'xtick.direction' : 'in',

'ytick.right' : True,

'ytick.direction' : 'in',})

Overview#

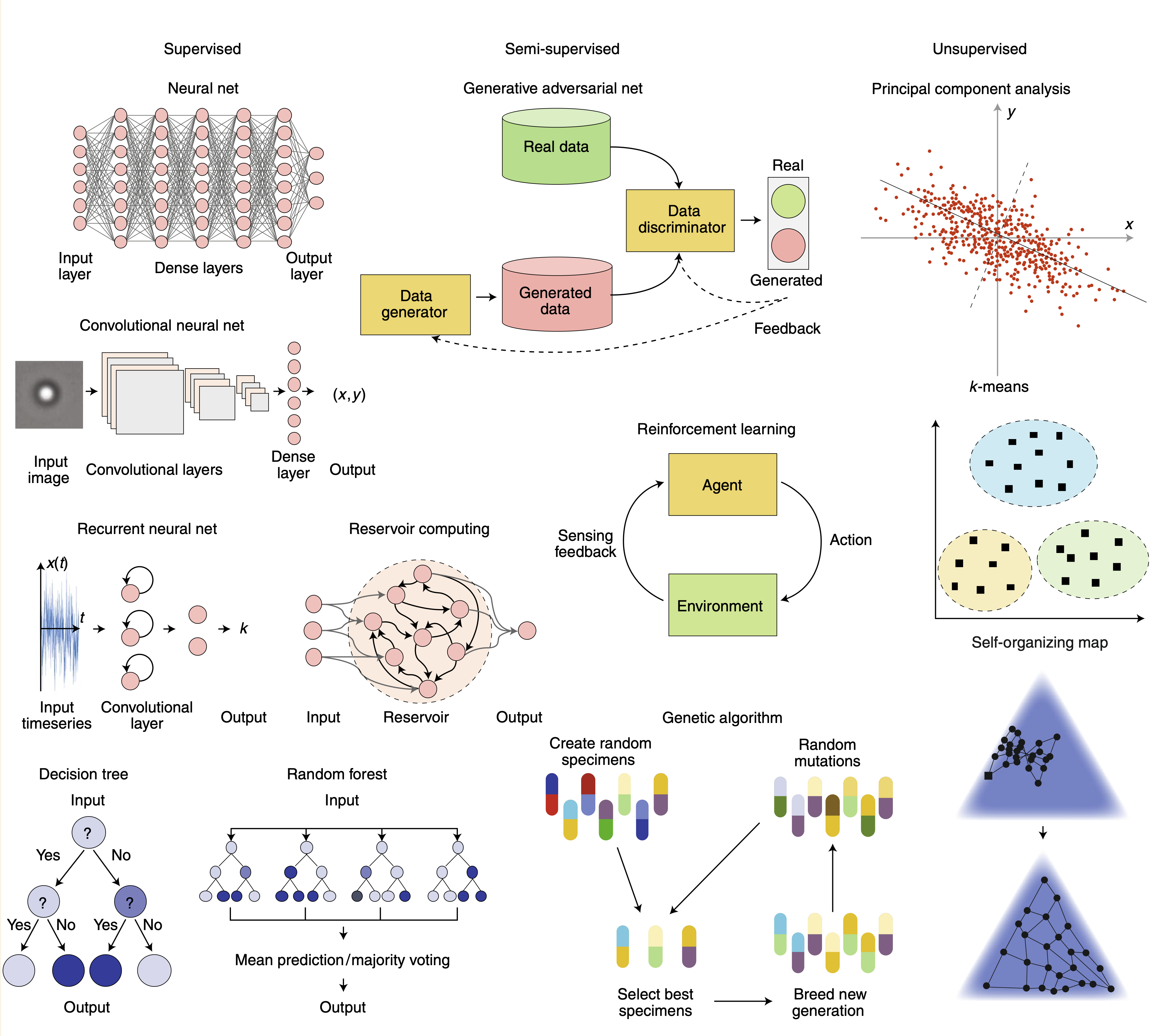

Machine learning has its origins long time ago and many of the currently very popular approaches have been developed in the past century. Two things have been stimmulating the current hype of machine learning techniques. One is the computational power that is available already at the level of your smartphone. The second one is the availability of data. Machine learning is divided into different areas, which are denotes as

supervised learning: telling the system what is right or wrong

semi-supervised learning: having only sparse information on what is right or wrong

unsupervised learning: let the system figure out what is right or wrong

The graphics below gives a small summary. In our course, we cannot cover all methods. We will focus on Reinforcement Learning and Neural Networks just to show you, how things could look in Python.

Image taken from F. Cichos et al. Nature Machine Intelligence (2020).

Reinforcement Learning#

Reinforcement learning is the process of learning what to do—how to map situations to actions—in order to maximize a numerical reward signal. Unlike most forms of machine learning, the learner or agent is not told which actions to take; instead, it must discover which actions yield the most reward by trying them. In the most interesting and challenging cases, actions may affect not only the immediate reward but also the next situation and, through that, all subsequent rewards. These two characteristics—trial-and-error search and delayed reward—are the most important distinguishing features of reinforcement learning.

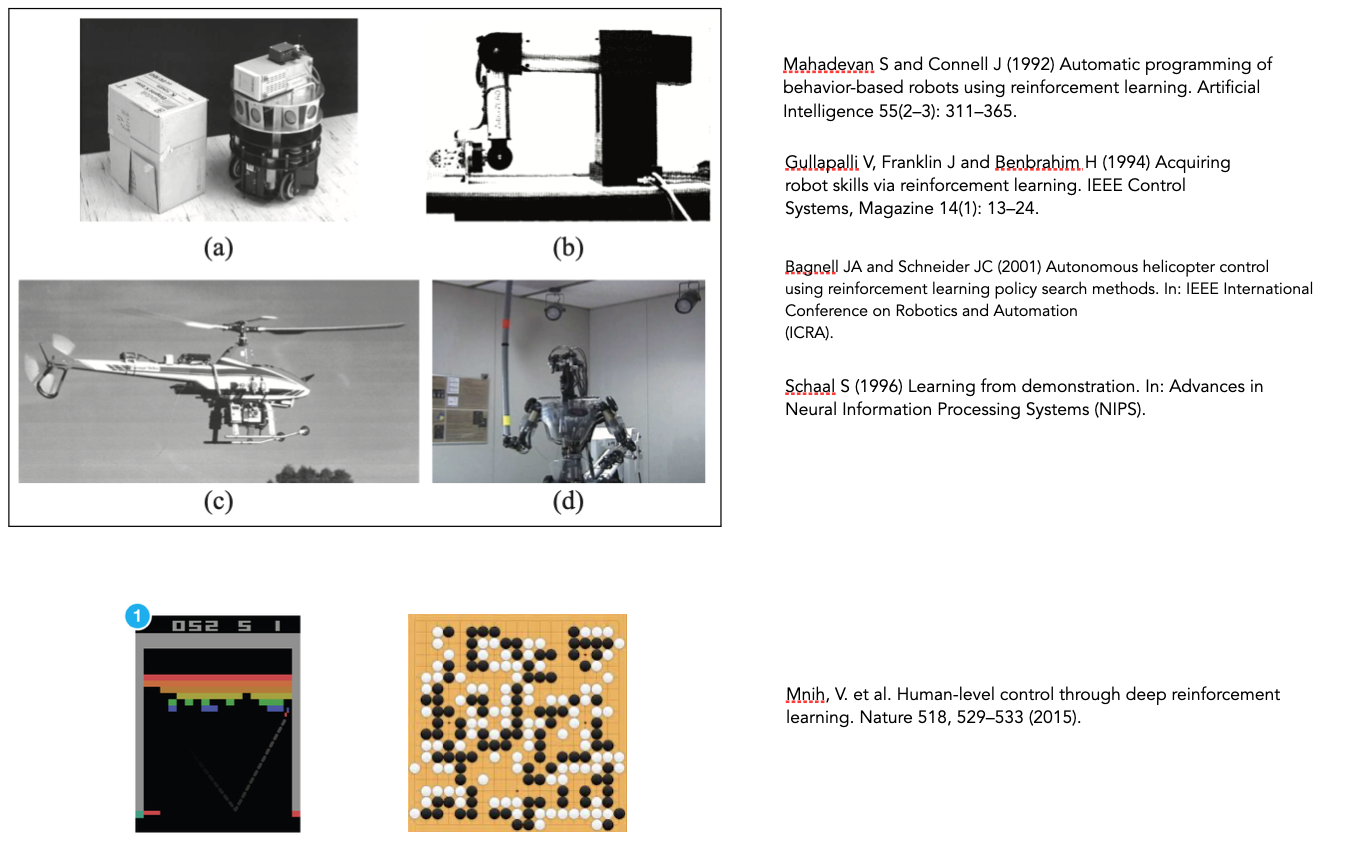

It has been around since the 1950s but gained momentum only in 2013 with the demonstrations of DeepMind on how to learn play Atari games like pong. The graphic below shows some of its applications in the field of robotics and gaming.

Markov Decision Process#

The key element of reinforcement learning is the so-called Markov Decision Process (MDP). The Markov Decision Process denotes a formalism for planning actions in the face of uncertainty. Formally, an MDP consists of:

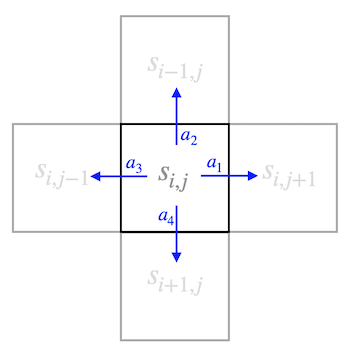

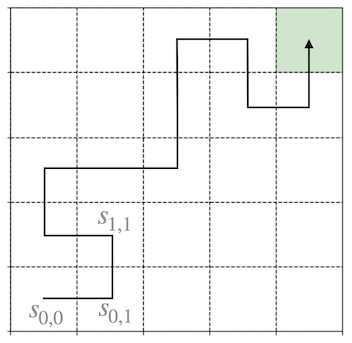

\(S\): a set of accessible states in the world

\(D\): an initial distribution over states

\(P_{sa}\): transition probability between states

\(A\): a set of possible actions to take in each state

\(\gamma\): the discount factor, which is a number between 0 and 1

\(R\): a reward function

We begin in an initial state \(s_{i,j}\) drawn from the distribution \(D\). At each time step \(t\), we must pick an action, for example \(a_1(t)\), as a result of which our state transitions to some state \(s_{i,j+1}\). The states do not necessarily correspond to spatial positions; however, when we discuss the gridworld later, we may use this example to understand the procedures.

By repeatedly picking actions, we traverse a sequence of states:

Our total reward is then the sum of discounted rewards along this sequence of states:

Here, the discount factor \(\gamma\), which is typically strictly less than one, causes rewards obtained immediately to be more valuable than those obtained in the future.

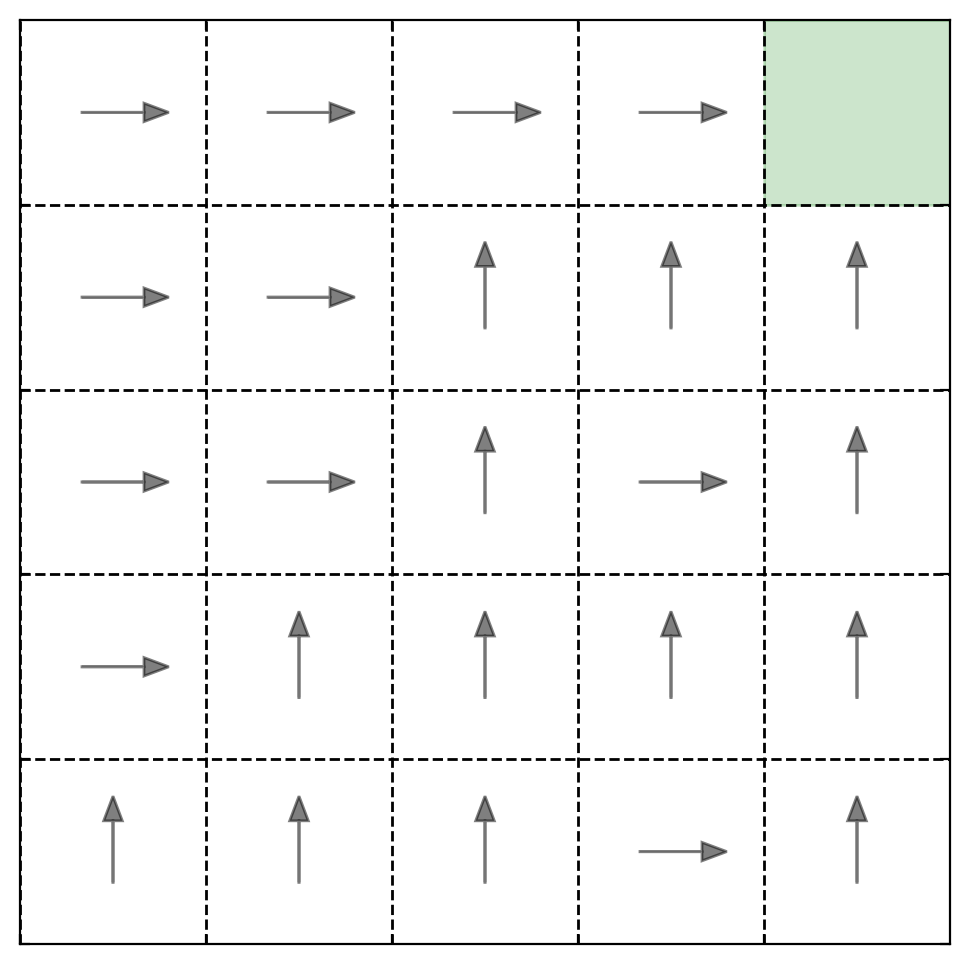

In reinforcement learning, our goal is to find a way of choosing actions \(a_0, a_1, \ldots\) over time to maximize the expected value of the rewards. The sequence of actions that realizes the maximum reward is called the optimal policy \(\pi^{*}\). A sequence of actions, in general, is called a policy \(\pi\).

Methods or RL#

There are different methods available to find the optimal policy. If we know the transition probabilities \(P_{sa}\), the methods are called model-based algorithms. One such method is the value iteration procedure, which we will not consider here.

If we don’t know the transition probabilities, it is referred to as model-free RL (reinforcement learning). We will examine one of these model-free algorithms, Q-learning.

In Q-learning, the value of an action in a state is measured by its Q-value. The expected value \(E\) of the rewards for an initial state and action under a given policy is the Q-function or Q-value:

This might sound complicated, but it is, in principle, straightforward. There is a Q-value for all actions in each state. Thus, if we have 4 actions and 25 states, we need to store a total of 100 Q-values.

For the optimal sequence of actions – the best way to proceed – this Q-value becomes a maximum:

The policy that provides the sequence of actions to achieve the maximum reward is then calculated by:

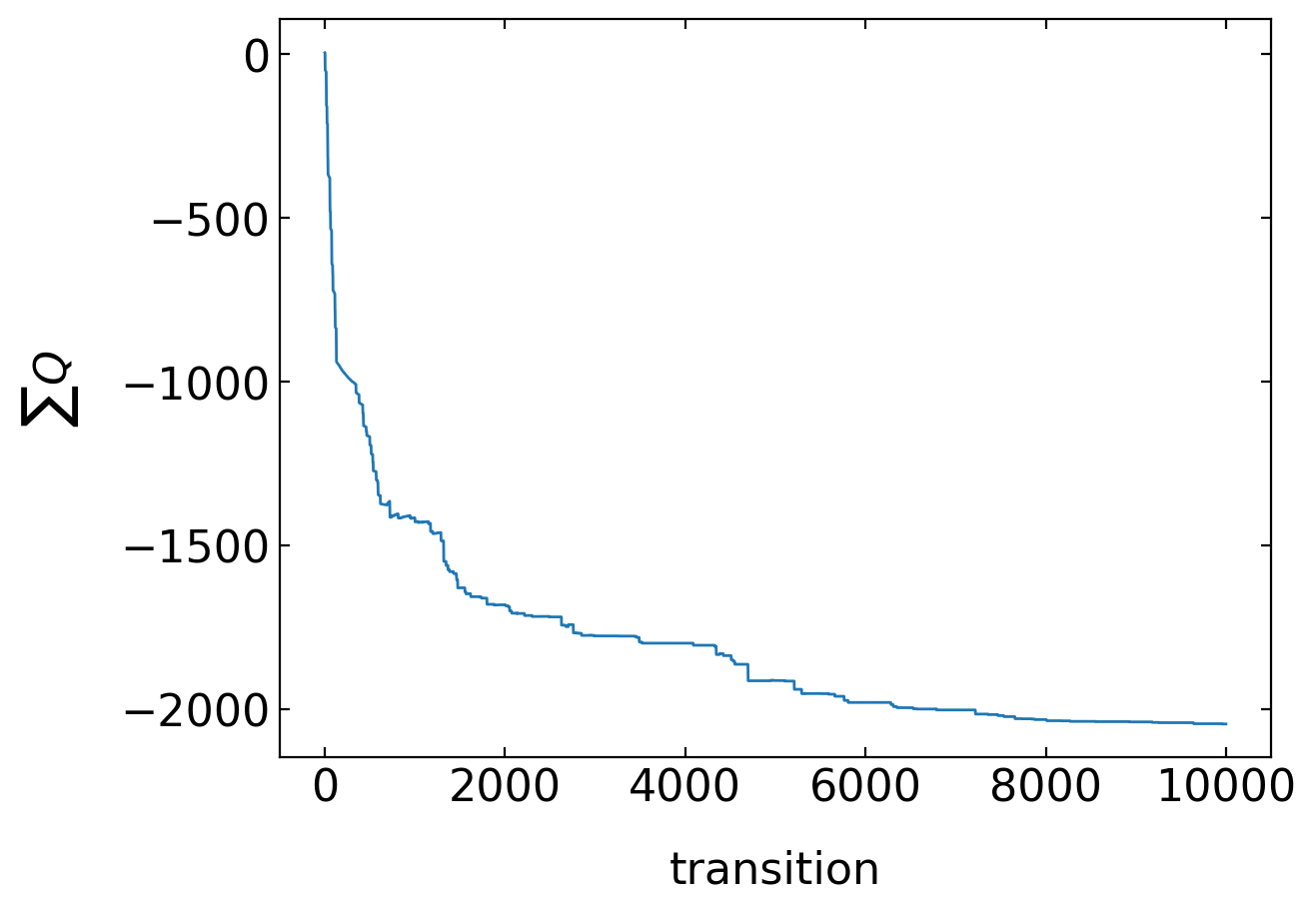

The Q-learning algorithm is an iterative procedure for updating the Q-value of each state and action, which converges to the optimal policy \(\pi^{*}\). It is given by:

This states that the current Q-value of the current state \(s\) and the taken action \(a\) for the next step is calculated from its current value \(Q_t(s,a)\) plus an update value. This update value is calculated by multiplying the learning rate \(\alpha\) with the reward \(R\) obtained when taking the action, plus a discounted value (discounted by \(\gamma\)) when taking the best action in the next state \(\gamma \max_{a'}Q_t(s',a')\). This is the procedure we would like to explore in a small Python program, which is not too difficult.

Where to go from here#

If you want to know more about Reinforcement Learning, have a look at the book of Sutton and Barto.